What Kirkpatrick and Moore Teach Us About Real Impact

Since officially stepping aside from a clinical nursing position in Intensive Care, I’ve spent most of my non-clinical career immersed in education, professional development and workforce development. Over the years, I’ve become increasingly obsessed with one question:

How do we know education is actually making a difference?

It’s easy to capture data on training completion. It’s essentially ticking the box that staff have attended a session or passed a quiz. However, completion doesn’t tell us much about whether someone has successfully transferred the new or reinforced knowledge or skill into their professional practice. It certainly doesn’t tell us if they are actually competent in practice.

This is where two of my favourite evaluation models, the New World Kirkpatrick Model and Don Moore's Model of Outcomes Assessment, have fundamentally shaped how I think about workforce development.

They both reinforce a simple but critical truth:

- Training completion ≠ impact

- Capability = real-world outcome

This article makes the case that we can still use training (wisely) to help build capability. However, training alone will not get us to the desired result: a capable, confident, and competent workforce.

I will use these two models to encourage L&D teams to think beyond just training. We must plan, implement and evaluate both training and non-training initiatives, and use these evaluation models to shift our focus towards capability, performance, and real-world care outcomes.

Kirkpatrick’s Model - Moving Beyond ‘Satisfaction’ to Real Impact

I was introduced to Kirkpatrick’s Model (and then the New World Kirkpatrick Model) early in my career, and it’s been a constant source of common sense ever since.

For those who haven’t encountered it, Kirkpatrick outlines four levels of evaluation for training effectiveness:

- Level 1 Reaction – Did learners engage with the training? Are they satisfied?

- Level 2 Learning – Did they acquire knowledge? Have skills been built or reinforced? Are learners more confident?

- Level 3 Behaviour – Are they applying what they learned in their role?

- Level 4 Results – Is this training actually improving outcomes? Did the targeted or desired outcome occur?


How Ausmed Applies Kirkpatrick’s Model to Evaluate Impact

At Ausmed, we apply Kirkpatrick’s Model across Levels 1, 2, and 3 to assess the education outcomes of our ANCC-accredited Courses.

At Level 1 (Reaction), we assess learner engagement through completion rates, course ratings, and qualitative feedback. This helps determine whether educators and engagement strategies are effective, informing future course design. We also monitor technical issues, like sound quality and audiovisual glitches, so that they can be fixed in future development.

At Level 2 (Learning), we measure knowledge change using pre- and post-test questions. This allows us to identify professional practice gaps and evaluate whether an educational activity effectively closed or narrowed them. Learner self-evaluations also provide insight into whether new knowledge was gained or reinforced, helping shape future content.
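To make the pre- and post-test comparison concrete, here is a minimal sketch of how knowledge change could be summarised for a small cohort. It is an illustration only, not Ausmed's actual method: the function name `knowledge_change`, the scores, and the choice of a simple mean gain in percentage points are all assumptions made for the example.

```python
from statistics import mean


def knowledge_change(pre_scores, post_scores):
    """Summarise knowledge change from paired pre- and post-test scores.

    Scores are assumed to be percent correct, with each position in the
    two lists belonging to the same learner (hypothetical data layout).
    """
    if not pre_scores or len(pre_scores) != len(post_scores):
        raise ValueError("Scores must be non-empty and paired per learner")

    # Per-learner gain in percentage points (post minus pre).
    gains = [post - pre for pre, post in zip(pre_scores, post_scores)]

    return {
        "mean_pre": mean(pre_scores),
        "mean_post": mean(post_scores),
        "mean_gain": mean(gains),
        # Learners whose score went up; a zero gain may still mean
        # existing knowledge was reinforced rather than newly gained.
        "improved": sum(1 for gain in gains if gain > 0),
    }


# Hypothetical scores for four learners (percent correct).
pre = [50, 60, 70, 80]
post = [70, 80, 70, 90]

summary = knowledge_change(pre, post)
print(summary)
```

A summary like this only speaks to Level 2 (Learning). A positive mean gain suggests a knowledge gap narrowed at the time of the activity; it says nothing about whether that knowledge is later applied in practice.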

At Level 3 (Behaviour), we track learners' intent to change practice through post-course reflection questions. If responses indicate low intent to apply learning, it prompts us to reassess whether the content met actual learning needs. Additionally, learners identify how the course will impact their practice, ensuring we continuously refine our approach to align education with real-world applications.

Currently, assessing beyond Level 3 in a practical and scalable way isn’t feasible for all activities. While Level 4 (Results) is the holy grail of evaluation, it’s important to recognise that not every initiative needs to reach the highest level. In many cases, assessing Levels 1 or 2 alone is completely appropriate, depending on the purpose and context of the learning activity.

Where Mandatory Training Sits

Most mandatory or compliance training stops at Levels 1 (Reaction) and 2 (Learning).

We track who attended, possibly collect feedback (“I liked the session”), and maybe even test their knowledge at the end. However, none of that tells us whether staff members are actually applying what they learned to improve care.

There is a clear shift for aged care providers, indicating a need for L&D teams to build capability in assessing mandatory training beyond Level 1 or Level 2. The new conformance-based audit model under the strengthened Aged Care Quality Standards means providers will be assessed at Level 3 (Behaviour) and Level 4 (Results).

Auditors are now asking:

- Is a complaint, feedback item or lagging quality indicator triggering a needs assessment?

- If training is deemed necessary, is the correct target audience (e.g., job role or facility) receiving appropriate training?

- If training is not necessary, is another strategy being implemented?

- Is there a feedback loop evaluating if that activity made a difference?

This means providers must track beyond training completion and move towards measuring actual workforce capability and its impact on care quality. We need to evaluate what we’re doing so we can genuinely understand:

- Are staff demonstrating competency in real-world care settings?

- Are there measurable improvements in care quality and outcomes?

- Is there alignment, consistency and awareness of this system between operational, management and executive teams?

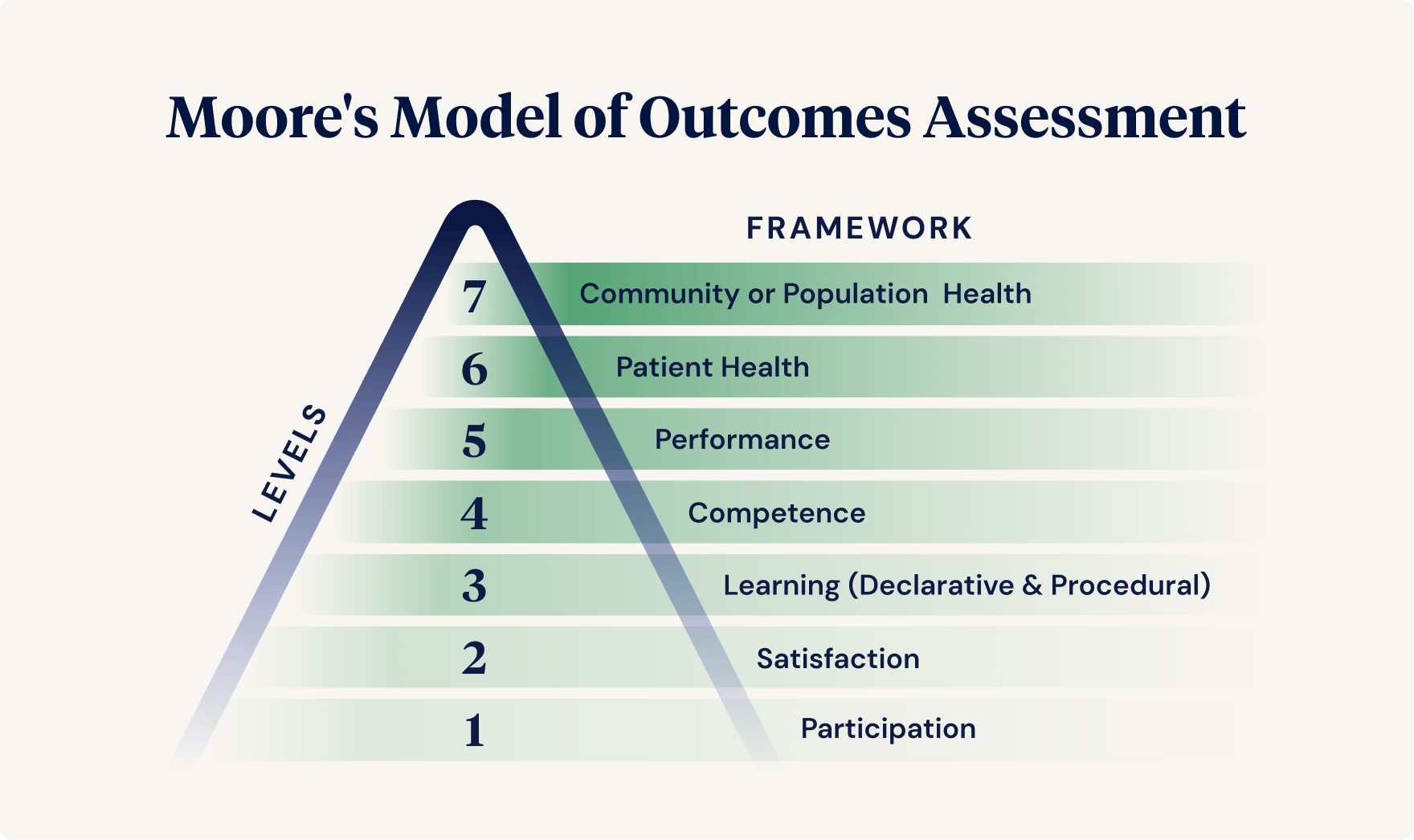

Don Moore’s Model - From Knowledge to Competency and Patient Outcomes

Another model I love is Don Moore's Model of Outcomes Assessment. Like a good name, a good model often has a nickname, and this one is sometimes called the Outcomes Pyramid.

Moore takes the same idea as Kirkpatrick, but his model moves through seven levels:

| Level | Framework | Description | Potential Data Sources | Limitation |
|---|---|---|---|---|
| 1 | Participation | Did they attend? | Attendance records or completion rates. | There are no insights into the value of the activity and its impact on learners. |
| 2 | Satisfaction | Did they like it? Were expectations met? | Post-activity questionnaire or evaluation. Can provide valuable feedback on the content integrity, design and subject matter expert. | Limited value in describing the impact of learning. |
| 3A | Learning: Declarative knowledge | The degree to which learners state what the activity intended them to know (learning objectives). “Knows” | Pre- and post-test knowledge comparison of self-reported knowledge gain (or reinforcement of existing knowledge). | There is no guarantee that the translation of knowledge is retained and moves from the learning to the practice environment. |
| 3B | Learning: Procedural knowledge | The degree to which learners state what the activity intended them to do. “Knows how” | Pre- and post-test knowledge comparison of self-reported knowledge gain (or reinforcement of existing knowledge). Case-based scenarios. | Self-report at the time of completion does not predict long-term retention and application of knowledge. |
| 4 | Competence | The degree to which learners demonstrate what the activity intended them to do in a learning environment. “Shows how” | Case-based assessments. Observation in a learning environment. Intent or commitment to change (as this has a high correlation with actual behaviour change). | Measures application of learning to practice in an educational (not real-world) setting. |
| 5 | Performance | The degree to which learners can do what the activity intended for them to be able to do in their practice environment. “Does” | Observation of performance in a care setting. Patient charts, history notes, self-report of performance. | Limited objective measures to assess the impact on performance. Assessing large cohorts of learners. It is difficult to correlate data with individual efforts. |
| 6 | Patient outcomes | Assesses changes in patient health status using actual patient data. | Quality indicator data. Feedback and complaints data. Satisfaction surveys of staff and individuals. | It may take a long time to reflect changes in health status. Difficulty pinpointing exact learning events with change. |
| 7 | Community or Population Health | Assesses progress toward the ultimate goal of improved community or population health. | Tracks net effect of practice change on target populations. | It may take a long time to reflect changes in health status. Change may be hard to measure. |

While it may not be practical, realistic, or necessary to evaluate every initiative up to Level 7, Moore’s model is valuable because it reinforces the range of evaluation levels possible, highlights potential data sources, and acknowledges their limitations.

Each level has its constraints, but the key takeaway is clear:

Attendance records or completion rates alone provide no real insight into the value of an activity or its impact on learners.

What This Means for Workforce Development

For too long, we’ve measured training in terms of how many people attended or whether they liked it. However, we don’t need more satisfied learners.

We need competent, capable staff who can apply their learning to provide high-quality, person-centred care.

In summary:

- Compliance training is not enough. The focus must shift from "Did they complete training?" to "Can they apply it in practice?"

- Audit readiness is now about capability. Regulators expect providers to demonstrate competency, not just deliver training.

- Training and education initiatives need to be evaluated at higher levels. Providers must measure competency, performance, and care outcomes, not just attendance and satisfaction.

The real goal is workforce capability. If training stops at completion and attendance records, it meets neither the new standards nor the expectations of individuals. Workforce capability is about building a system where staff are consistently competent and confident and provide quality care.

Measuring training is one thing.

Measuring impact is everything.

Ensure your organisation is prepared for your first audit under the strengthened Standards.

Want to Keep Learning?

We’re helping providers move beyond compliance and build actual workforce capability.

Reach out to me at zoe@ausmed.com.au if you want to write about this topic or join an upcoming webinar.

Catch up on the Compliance Capability Webinar Series, designed for educators, L&D professionals, HR teams, and anyone involved in staff induction, onboarding, and training.

Author

Zoe Youl

Zoe Youl is a Critical Care Registered Nurse with over ten years of experience at Ausmed, currently as Head of Community. With expertise in critical care nursing, clinical governance, education and nursing professional development, she has built an in-depth understanding of the educational and regulatory needs of the Australian healthcare sector.

As the Accredited Provider Program Director (AP-PD) of the Ausmed Education Learning Centre, she maintains and applies accreditation frameworks in software and education. In 2024, Zoe led the Ausmed Education Learning Centre to achieve Accreditation with Distinction for the fourth consecutive cycle with the American Nurses Credentialing Center’s (ANCC) Commission on Accreditation. The AELC is the only Australian provider of nursing continuing professional development to receive this prestigious recognition.

Zoe holds a Master's in Nursing Management and Leadership, and her professional interests focus on evaluating the translation of continuing professional development into practice to improve learner and healthcare consumer outcomes. From 2019 to 2022, Zoe provided an international perspective to the workgroup established to publish the fourth edition of Nursing Professional Development Scope & Standards of Practice. Zoe was invited to be a peer reviewer for the 6th edition of the Core Curriculum for Nursing Professional Development.